Imagine having a chatbot that’s not just smart, but also gets your site’s vibe. That’s what custom GPTs are all about. It’s like giving your website its very own voice – cool, right? In this world where everyone craves something unique, customizing a GPT for your website isn’t just cool, it’s absolutely epic!

Thinking of jazzing up your website’s chat experience? Or maybe you’re aiming to be the next big hit in the GPT Store? Awesome, you’ve landed in the perfect spot.

Discover the surprisingly simple journey of how to create custom GPTs from a URL, transforming your website’s interface into a dynamic, user-friendly conversation hub.

Let’s dive in.

1 Overview of Custom GPTs

Custom GPTs are a revolutionary advancement in the realm of digital communication and automation. At their core, they are tailored versions of AI-powered chatbots, designed to meet specific needs of websites and applications. These custom models are more than just tools; they’re game-changers, enabling businesses and developers to provide unique, personalized experiences for their users.

The primary benefit of these custom GPTs is their versatility. They can be integrated into various platforms, offering answers, guidance, or even coding assistance based on the specific knowledge they’ve been given. Note that a custom GPT is not retrained on your content; it draws on the knowledge files you supply. This customization results in a more efficient, user-friendly experience that directly addresses each user’s queries.

For a more in-depth understanding of how you can create your own Custom GPT, don’t miss our detailed guide about creating GPTs. It’s packed with insights and step-by-step instructions to help you harness the power of custom AI for your specific needs.

Now, let’s delve deeper into the heart of this process: GPT Crawler. This open-source solution is not just a tool; it’s a gateway to endless possibilities in the world of custom AI.

2 GPT Crawler: The Open-Source Solution

With the overall concept of custom GPTs covered, it’s time to look at the tool that does the heavy lifting: GPT Crawler. It’s the piece that turns a website’s content into the knowledge your custom GPT draws on.

What is GPT Crawler?

Simply put, GPT Crawler is an open-source project that allows you to create a custom GPT by crawling specific websites. It transforms the content of these sites into a knowledge base, which then powers your customized GPT model.

Key Features and Capabilities

- Customization: You can set it to crawl any site by specifying the URL.

- Depth of Knowledge: The crawler meticulously gathers information from the specified pages, ensuring a rich and detailed knowledge base.

- Flexibility: It’s adaptable to various types of content, capable of handling both public and client-side rendered information.

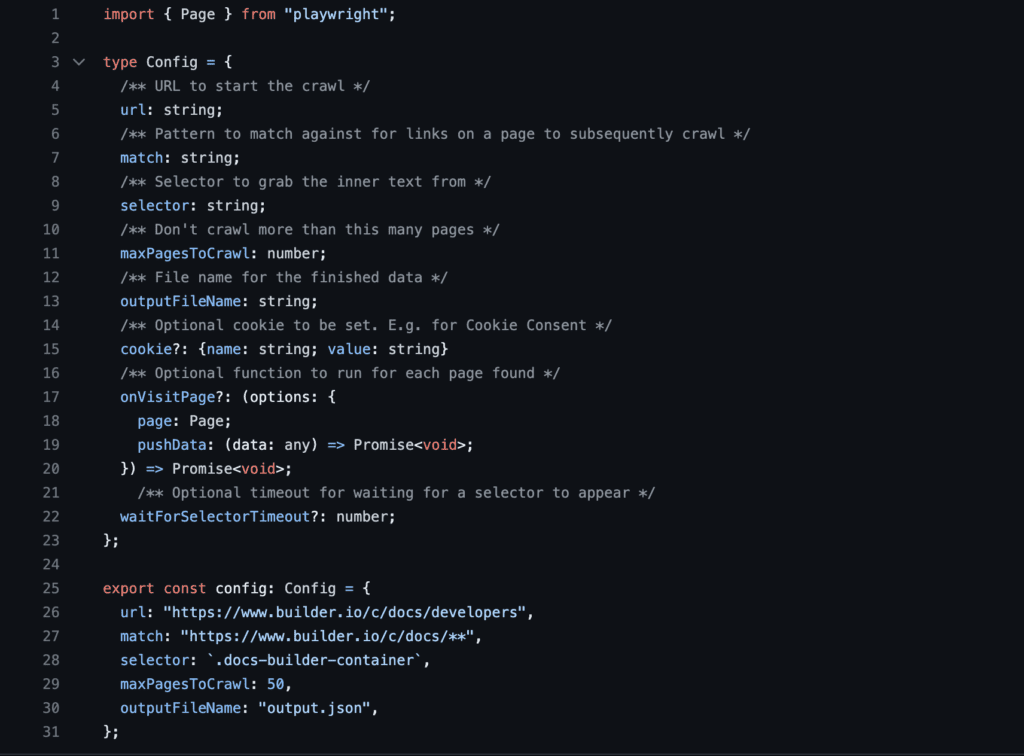

- User-Friendly Configuration: With simple configurations in the `config.ts` file, you can easily set parameters like the URL to crawl, match patterns, and page limits.

- Efficiency: The crawler uses a headless browser, making it efficient and capable of including dynamic web content.

Community and Open-Source Aspect

The beauty of GPT Crawler lies in its open-source nature. This means:

- Continuous Improvement: Being open-source, it’s constantly evolving with contributions from developers around the world.

- Community Driven: Users can suggest improvements or add new features, enhancing its utility and performance.

- Transparency: You have complete visibility into how the tool works and can modify it to suit your specific needs.

3 Setting Up GPT Crawler

Now that we understand the significance of custom GPTs and the role of the GPT Crawler, let’s walk through the initial setup process. Setting up GPT Crawler is straightforward and involves a few key steps:

Initial Setup

- Cloning the Repository:

- The first step is to obtain the GPT Crawler code. This is done by cloning the repository from GitHub. Use the command `git clone https://github.com/builderio/gpt-crawler` to get the necessary files onto your local machine.

- Installing Dependencies:

- Once you have the repository, navigate into the project directory (`cd gpt-crawler`) and install the required dependencies.

- Running `npm install` in your terminal will set up everything you need to get started.

Configuring the Crawler

With the initial setup complete, the next step is to configure the crawler to suit your specific needs:

- The `config.ts` File:

- The configuration of the crawler is managed in the `config.ts` file. This is where you tailor the crawler to target the specific content you want your custom GPT to learn from.

- Defining Crawl Criteria:

- In the `config.ts` file, specify the base URL, which is the starting point of your crawl. For example, to crawl a documentation site, you would set the URL to the main docs page.

- Set up the matching pattern to determine which links on the site should be followed. This ensures that the crawler stays focused on relevant content.

- Specify the selector for scraping the content. This helps in extracting the right text from the pages.

- You can also limit the number of pages to crawl by setting `maxPagesToCrawl`, ensuring efficiency and focus.

By following these steps, you’ll have your GPT Crawler configured and ready to compile the information needed for your custom GPT.
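To make the settings above concrete, here is a minimal sketch of what such a configuration might look like. Treat it as an illustration rather than the project’s actual file: only `maxPagesToCrawl` is named in this article, so the field names `url`, `match`, and `selector`, the local `CrawlConfig` type, the `validateConfig` helper, and the `example.com` values are all assumptions. Check the `config.ts` in your own checkout for the real shape.

```typescript
// Local stand-in for the crawler's config type so this snippet compiles on its own.
// Assumption: field names other than maxPagesToCrawl are illustrative.
interface CrawlConfig {
  url: string; // starting point of the crawl
  match: string; // pattern a link must match to be followed
  selector: string; // CSS selector for the content to extract
  maxPagesToCrawl: number; // upper bound on crawled pages
}

// Hypothetical values for a documentation site.
const crawlConfig: CrawlConfig = {
  url: "https://example.com/docs",
  match: "https://example.com/docs/**",
  selector: "main",
  maxPagesToCrawl: 50,
};

// Hypothetical helper (not part of GPT Crawler): returns a list of
// problems found in a config, or an empty list if it looks sane.
function validateConfig(config: CrawlConfig): string[] {
  const problems: string[] = [];
  if (!/^https?:\/\//.test(config.url)) {
    problems.push("url must start with http:// or https://");
  }
  if (config.match.trim() === "") {
    problems.push("match must not be empty");
  }
  if (config.selector.trim() === "") {
    problems.push("selector must not be empty");
  }
  if (!Number.isInteger(config.maxPagesToCrawl) || config.maxPagesToCrawl < 1) {
    problems.push("maxPagesToCrawl must be a positive integer");
  }
  return problems;
}

const configProblems = validateConfig(crawlConfig);
```

A quick check like this before a long crawl can catch a mistyped URL or an empty selector early. In the project itself the config object would normally be exported from `config.ts`; the export is left out here so the snippet runs standalone.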

4 Running the Crawler

Having set up and configured the GPT Crawler, we now move to the vital stage of executing the crawl. This process is where the crawler gathers the data that will empower your custom GPT with specific knowledge.

Execution Process

- Running the Crawler:

- To start the crawler, simply run `npm start` in your terminal. This command activates the crawler and begins the process of scanning the pages of your specified URL.

- It’s a real-time operation, meaning you can observe the crawler as it navigates through the web pages, meticulously gathering information.

- Mechanism of Headless Browser Operation:

- The GPT Crawler operates using a headless browser. This means it can access and render content from web pages just like a regular browser, but without a graphical user interface.

- This capability is crucial for including content that is client-side rendered, ensuring a comprehensive crawl that captures even the most dynamic elements of a website.
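If you’re curious what a crawl like this does conceptually, the sketch below walks a tiny in-memory “site” the same way: start at one URL, follow only links that match, and stop at a page limit. It is not GPT Crawler’s actual code. The `SiteMap` type, the `crawlOrder` function, and the sample pages are hypothetical, a plain object stands in for the headless browser, and a simple prefix check stands in for the real match pattern.

```typescript
// In-memory stand-in for a website: each URL maps to the links found on that page.
type SiteMap = Record<string, string[]>;

// Breadth-first walk from startUrl. Only links beginning with matchPrefix
// are followed, and the walk stops once maxPages pages have been visited.
function crawlOrder(
  site: SiteMap,
  startUrl: string,
  matchPrefix: string,
  maxPages: number
): string[] {
  const visited = new Set<string>();
  const queue: string[] = [startUrl];
  const order: string[] = [];

  while (queue.length > 0 && order.length < maxPages) {
    const url = queue.shift();
    if (url === undefined) break;
    if (visited.has(url)) continue; // the same link can be queued more than once
    visited.add(url);
    order.push(url);

    for (const link of site[url] ?? []) {
      if (link.startsWith(matchPrefix) && !visited.has(link)) {
        queue.push(link);
      }
    }
  }
  return order;
}

// Hypothetical four-page docs site with one off-topic blog link.
const sampleSite: SiteMap = {
  "https://example.com/docs": [
    "https://example.com/docs/a",
    "https://example.com/blog",
    "https://example.com/docs/b",
  ],
  "https://example.com/docs/a": [
    "https://example.com/docs/b",
    "https://example.com/docs/c",
  ],
  "https://example.com/docs/b": [],
  "https://example.com/docs/c": [],
};

const visitedPages = crawlOrder(
  sampleSite,
  "https://example.com/docs",
  "https://example.com/docs",
  3
);
```

With a limit of 3, the walk visits the docs home page and two sub-pages, and never touches the blog link because it doesn’t match. That is the same trade-off you control with the match pattern and `maxPagesToCrawl`.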

Output Generation

- Understanding the `output.json` File:

- Once the crawl is complete, the crawler generates an `output.json` file. This file contains the titles, URLs, and the extracted text (stored under the `html` key) from all the crawled pages. It’s the condensed essence of your targeted web content, ready to be transformed into a knowledge base for your GPT.

    [
      {
        "title": "GPTs and Custom Actions - gptpluginz.com",
        "url": "https://gptpluginz.com/gpts-and-custom-actions/",
        "html": "..."
      },
      {
        "title": "5 Custom GPTs For Seo - gptpluginz.com",
        "url": "https://gptpluginz.com/gpts-for-seo/",
        "html": "..."
      },
      ...
    ]

- Significance of the Crawled Data:

- The data collected by the crawler is not just a random aggregation of information. It’s a carefully curated set of content that directly relates to your chosen domain. This data forms the backbone of your custom GPT, enabling it to provide specialized, accurate responses based on the crawled content.
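Before uploading the file anywhere, it can be worth a quick look at what the crawl actually captured. The sketch below parses records in the `title` / `url` / `html` shape shown in the sample and reports the page count, total text length, and any pages that came back empty. The `CrawledPage` and `CrawlSummary` types, the two helper functions, and the sample data are made up for illustration; in practice you would pass in the contents of your own `output.json`.

```typescript
// Shape of one record, matching the keys in the sample output.
interface CrawledPage {
  title: string;
  url: string;
  html: string;
}

interface CrawlSummary {
  pageCount: number;
  totalChars: number;
  emptyPages: string[]; // URLs whose extracted text is blank
}

// Parse the JSON text and verify every entry has the three expected string fields.
function parseCrawlOutput(json: string): CrawledPage[] {
  const data: unknown = JSON.parse(json);
  if (!Array.isArray(data)) {
    throw new Error("expected a JSON array of pages");
  }
  return data.map((item: unknown, index: number): CrawledPage => {
    const page = (item ?? {}) as Partial<CrawledPage>;
    if (
      typeof page.title !== "string" ||
      typeof page.url !== "string" ||
      typeof page.html !== "string"
    ) {
      throw new Error(`entry ${index} is missing title, url, or html`);
    }
    return { title: page.title, url: page.url, html: page.html };
  });
}

// Count pages and characters, and collect URLs with no extracted text.
function summarize(pages: CrawledPage[]): CrawlSummary {
  let totalChars = 0;
  const emptyPages: string[] = [];
  for (const page of pages) {
    totalChars += page.html.length;
    if (page.html.trim() === "") {
      emptyPages.push(page.url);
    }
  }
  return { pageCount: pages.length, totalChars, emptyPages };
}

// Hypothetical data standing in for the contents of output.json.
const sampleOutput = JSON.stringify([
  { title: "Docs home", url: "https://example.com/docs", html: "Welcome to the docs." },
  { title: "Empty page", url: "https://example.com/docs/empty", html: "" },
]);

const summary = summarize(parseCrawlOutput(sampleOutput));
```

A page showing up in `emptyPages` is often a hint that the selector in your configuration didn’t match anything on that page, which is worth fixing before you build a GPT on top of the data.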

5 Integration and Usage of Custom GPT

Transitioning from the creation and running of the GPT Crawler, we now focus on how to integrate and utilize the custom GPT that has been tailored with your specific data.

UI and API Access Methods

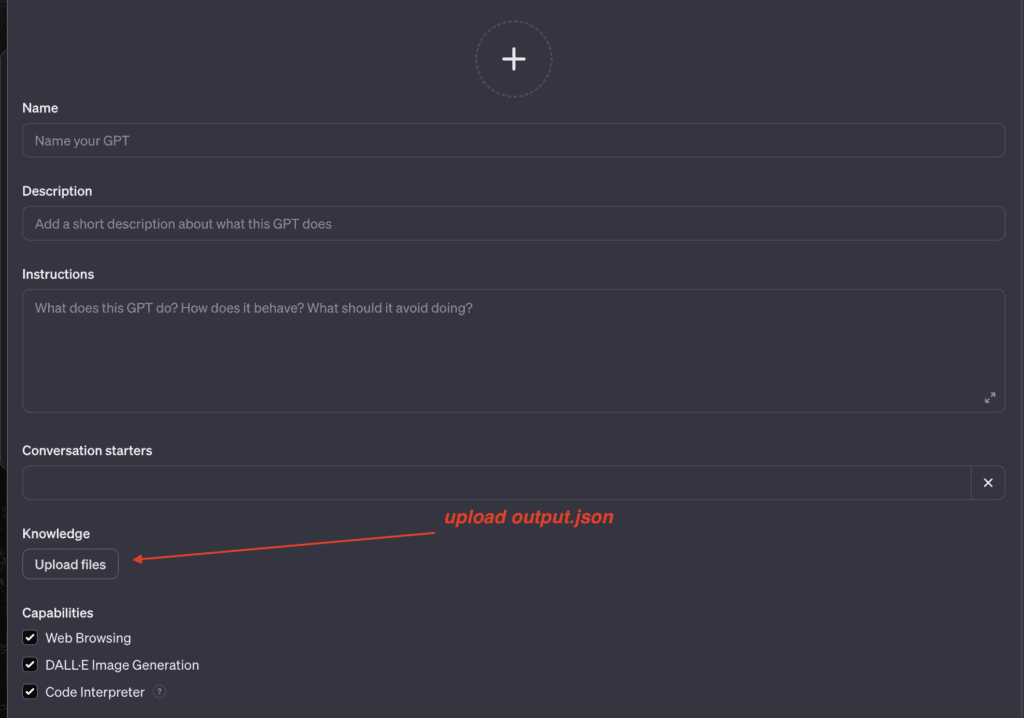

- Integration via UI:

- Once you have your `output.json` file from the GPT Crawler, you can directly upload this to ChatGPT. This process involves creating a new GPT, configuring it with your file, and thus equipping it with the knowledge from your crawled pages. It’s a straightforward method that allows you to interact with your custom GPT through a user-friendly interface.

- This approach is ideal for those looking to quickly deploy a custom GPT for general use or for testing purposes.

- Integrating with Products through API:

- For a more integrated solution, especially in product development, the OpenAI API offers a robust pathway. Here, you create a new assistant and upload your generated file.

- This method gives you API access to your custom GPT, allowing you to embed it seamlessly into your products or services. It’s an excellent choice for providing custom-tailored assistance within your digital offerings, ensuring that the GPT aligns perfectly with the specific knowledge about your product or content.

Read More : OpenAI Assistants API : How To Get Started?

Practical Applications

- Case Study: gptpluginz.com:

- As a practical example, consider our website, gptpluginz.com. By using the GPT Crawler, we created a custom GPT specifically designed to answer questions about our blog.

- This custom GPT not only enhances user experience on our site but also serves as a demonstration of the practical application of this technology in a real-world scenario.

In summary, the integration and usage of custom GPTs, whether through UI or API, open up a world of possibilities for enhancing user interaction and product integration. The case of gptpluginz.com illustrates just one of the many ways this technology can be applied to provide bespoke solutions in the digital space.

6 Conclusion

The journey of creating a custom GPT using the GPT Crawler showcases the power and flexibility of AI in today’s digital landscape. Whether for enhancing website interactivity, providing detailed product information, or answering complex queries, the potential applications are vast.

The process of setting up and running the GPT Crawler, then integrating the resulting custom GPT into various platforms, demonstrates the simplicity and effectiveness of custom AI solutions.

We encourage you to explore this tool for your unique needs. The potential to innovate and improve user engagement is immense, and we look forward to seeing the diverse and creative applications that emerge from this technology.
